see TestManagement below

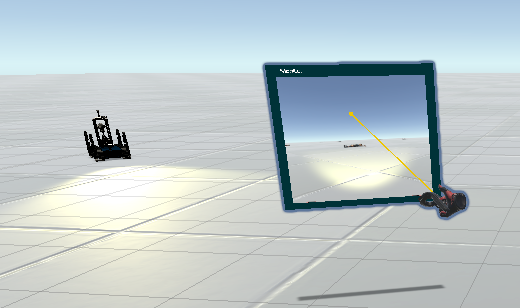

The user can control the robot directly by operating the handle (the VR controller).

<img src="https://raw.githubusercontent.com/elaineJJY/Storage/main/Picture/20210719141931.png" alt="handle1" style="zoom: 50%;" />

##### 2. Lab

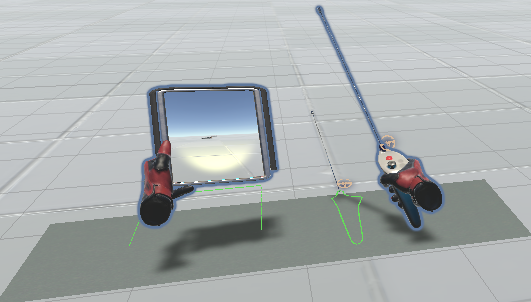

The environment is designed to resemble a real laboratory as closely as possible. The user interacts with it by grasping the joystick or pressing buttons with the virtual hand.

The part that involves virtual joystick movement and button effects uses an open…

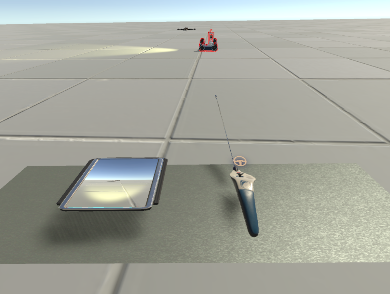

In this mode, the user can pick up the operating tools by hand, for example the remote control. Alternatively, a target point can be set directly with the right hand, and the robot then moves to that point automatically.
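The README does not describe how the robot reaches the target point. A minimal sketch follows. It assumes a planar robot that heads straight for the target at a capped speed and stops once it is within a tolerance. `Pose2D`, `step_towards`, `run_to_target` and every parameter value are hypothetical, not taken from the project.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Hypothetical planar pose: position in metres, heading in radians."""
    x: float
    y: float
    theta: float = 0.0


def step_towards(pose: Pose2D, target: tuple[float, float],
                 max_speed: float = 0.5, dt: float = 0.1,
                 tolerance: float = 0.05) -> tuple[Pose2D, bool]:
    """Advance one time step towards `target`; return (new pose, arrived?)."""
    dx, dy = target[0] - pose.x, target[1] - pose.y
    dist = math.hypot(dx, dy)
    if dist <= tolerance:
        return pose, True
    # Travel at most max_speed * dt per step, never past the target.
    travel = min(max_speed * dt, dist)
    heading = math.atan2(dy, dx)
    new_pose = Pose2D(pose.x + travel * math.cos(heading),
                      pose.y + travel * math.sin(heading),
                      heading)
    return new_pose, dist - travel <= tolerance


def run_to_target(start: Pose2D, target: tuple[float, float],
                  max_steps: int = 10_000, **kwargs) -> Pose2D:
    """Repeat step_towards until the robot arrives (hypothetical helper)."""
    pose = start
    for _ in range(max_steps):
        pose, arrived = step_towards(pose, target, **kwargs)
        if arrived:
            return pose
    raise RuntimeError("target not reached within max_steps")


if __name__ == "__main__":
    final = run_to_target(Pose2D(0.0, 0.0), (3.0, 4.0))
    print(f"stopped at ({final.x:.2f}, {final.y:.2f})")
```

Capping the distance per step keeps the motion smooth and avoids overshooting. A real robot would also need obstacle avoidance and turning dynamics, which this sketch omits.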

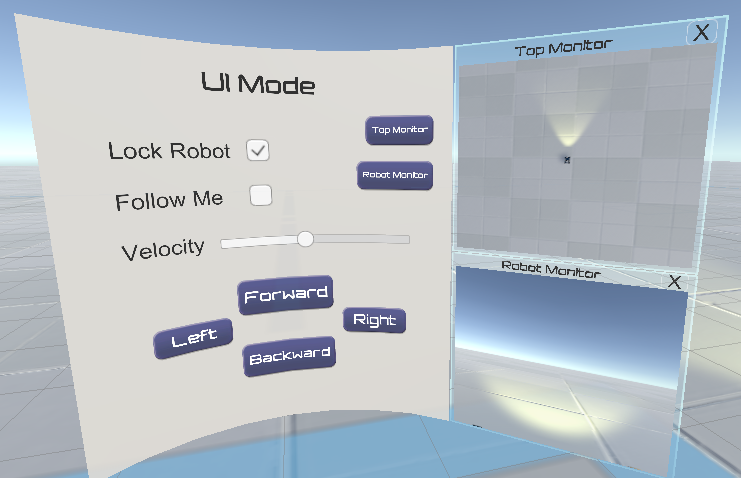

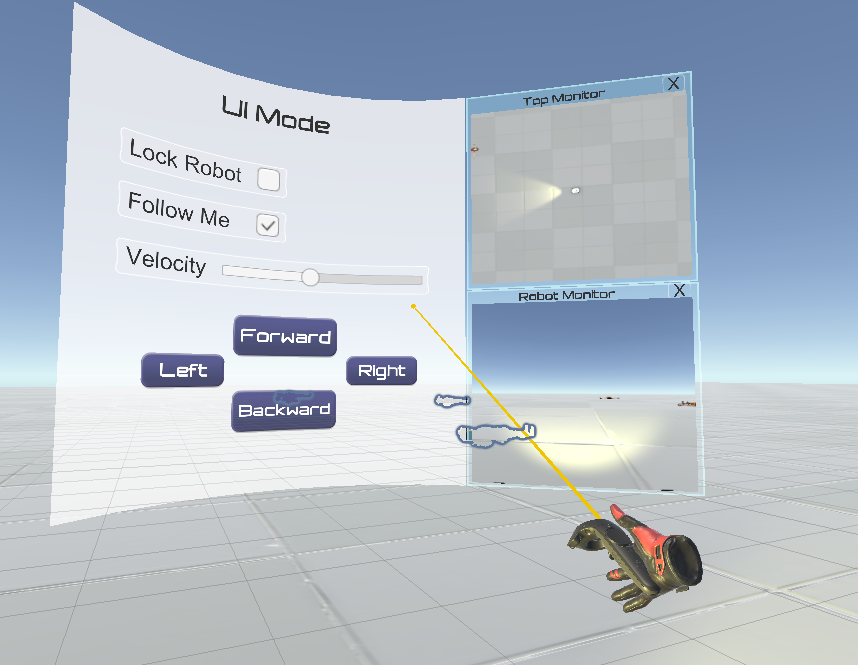

##### 4. UI Mode

In this mode, the user manipulates the robot using the rays emitted by the handle. Clicking a direction button sets the robot's direction of movement. The user can also turn on the follow function, in which case the robot continuously follows the user's position in the virtual world.
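The README does not specify how the direction buttons or the follow function compute the robot's motion. A minimal sketch follows. It assumes each direction button maps to a fixed planar velocity and that following is a proportional controller that keeps the robot about `follow_distance` away from the user. `DIRECTIONS`, `velocity_for_button`, `follow_velocity` and all parameter values are hypothetical.

```python
from __future__ import annotations

import math

# Hypothetical mapping from UI direction buttons to unit vectors (x, y).
DIRECTIONS = {
    "forward": (0.0, 1.0),
    "backward": (0.0, -1.0),
    "left": (-1.0, 0.0),
    "right": (1.0, 0.0),
}


def velocity_for_button(button: str, speed: float = 0.5) -> tuple[float, float]:
    """Planar velocity commanded while a direction button is pressed."""
    dx, dy = DIRECTIONS[button]
    return dx * speed, dy * speed


def follow_velocity(robot_xy: tuple[float, float], user_xy: tuple[float, float],
                    follow_distance: float = 1.0, max_speed: float = 0.5,
                    gain: float = 1.0) -> tuple[float, float]:
    """Proportional follow: move towards the user, stop at follow_distance."""
    dx = user_xy[0] - robot_xy[0]
    dy = user_xy[1] - robot_xy[1]
    dist = math.hypot(dx, dy)
    error = dist - follow_distance
    if error <= 0.0:
        # Close enough (this also covers dist == 0): stop instead of crowding the user.
        return 0.0, 0.0
    speed = min(gain * error, max_speed)
    return speed * dx / dist, speed * dy / dist


if __name__ == "__main__":
    robot, user, dt = [0.0, 0.0], (5.0, 0.0), 0.1
    for _ in range(200):
        vx, vy = follow_velocity((robot[0], robot[1]), user)
        robot[0] += vx * dt
        robot[1] += vy * dt
    print(f"robot settled near ({robot[0]:.3f}, {robot[1]:.3f})")
```

Capping the speed at `max_speed` keeps the robot from rushing when the user teleports far away. The proportional term slows it smoothly as it nears the follow distance.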
