90 changed files with 190 additions and 7 deletions

BIN

.DS_Store

BIN

Concepts for operating ground based rescue robots using virtual reality/.DS_Store

BIN

Concepts for operating ground based rescue robots using virtual reality/Concepts_for_operating_ground_based_rescue_robots_using_virtual_reality.pdf

+ 3

- 0

Concepts for operating ground based rescue robots using virtual reality/Thesis_Jingyi.tex

+ 76

- 4

Concepts for operating ground based rescue robots using virtual reality/chapters/implementation.tex

+ 111

- 1

Concepts for operating ground based rescue robots using virtual reality/chapters/result.tex

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/I found it easy to concentrate on controlling the robot.jpg

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/I found it easy to move robot in desired position.jpg

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/I found it easy to perceive the details of the environment.jpg

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/Rescue situation.png

BIN

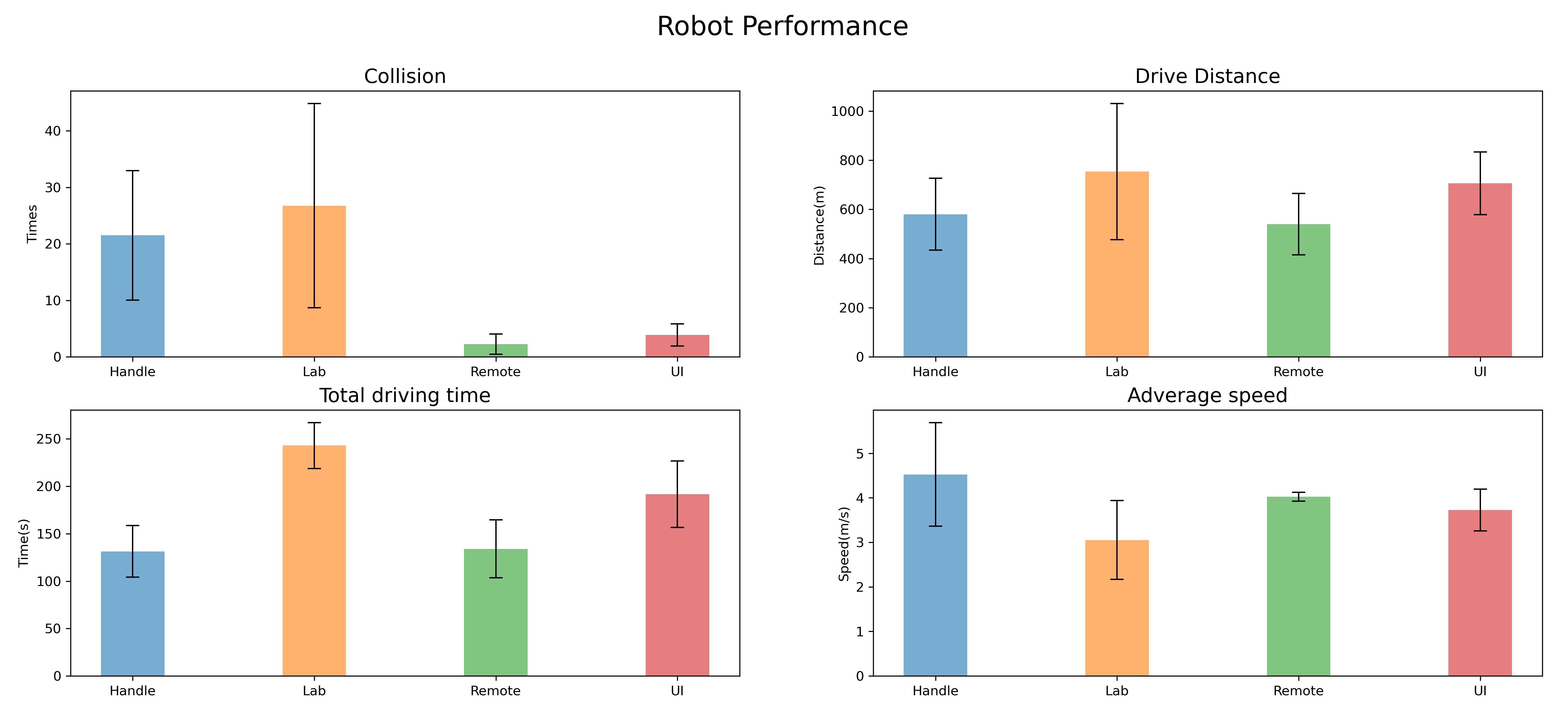

Concepts for operating ground based rescue robots using virtual reality/graphics/Robot Performance.png

BIN

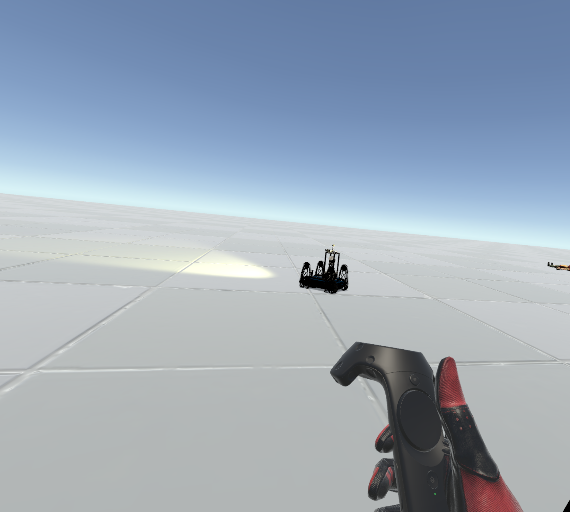

Concepts for operating ground based rescue robots using virtual reality/graphics/handle1.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/handle2.png

BIN

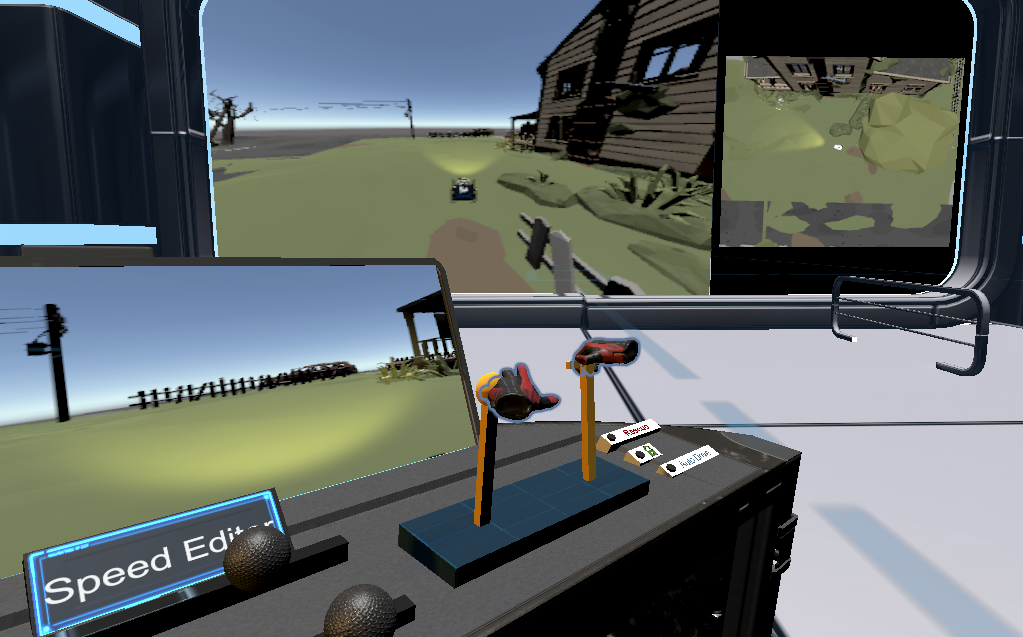

Concepts for operating ground based rescue robots using virtual reality/graphics/lab1.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/lab3.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/lab4.png

BIN

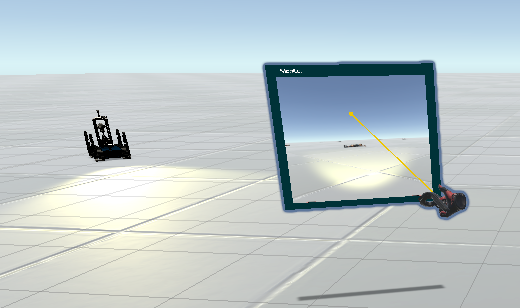

Concepts for operating ground based rescue robots using virtual reality/graphics/remote1.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/remote2.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/remote3.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/remote4.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/robot1.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/robot2.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/robot3.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/robot4.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/summary.jpg

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/testCollider.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/testCollider2.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/testVictim1.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/testVictim2.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/testVictim3.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/testVictim4.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/total.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/ui1.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/ui2.png

BIN

Concepts for operating ground based rescue robots using virtual reality/graphics/ui3.png

BIN

User Study/.DS_Store

BIN

User Study/Einverstaendnis.pdf

BIN

User Study/Photo/.DS_Store

BIN

User Study/Photo/Snipaste_2021-07-10_14-59-39.png

BIN

User Study/Photo/Snipaste_2021-07-10_16-08-44.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-03-50.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-24-14.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-24-36.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-24-58.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-36-48.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-37-54.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-44-25.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-44-30.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-44-58.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-45-05.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-46-06.png

BIN

User Study/Photo/Snipaste_2021-07-10_17-52-09.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-30-39.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-33-13.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-33-35.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-34-20.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-34-40.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-35-37.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-46-13.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-46-56.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-50-44.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-51-21.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-51-33.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-51-57.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-53-11.png

BIN

User Study/Photo/Snipaste_2021-07-17_18-53-26.png

BIN

User Study/Photo/handle1.png

BIN

User Study/Photo/handle2.png

BIN

User Study/Photo/lab1.png

BIN

User Study/Photo/lab3.png

BIN

User Study/Photo/lab4.png

BIN

User Study/Photo/remote1.png

BIN

User Study/Photo/remote2.png

BIN

User Study/Photo/remote3.png

BIN

User Study/Photo/remote4.png

BIN

User Study/Photo/remote5.png

BIN

User Study/Photo/robot1.png

BIN

User Study/Photo/robot2.png

BIN

User Study/Photo/robot3.png

BIN

User Study/Photo/robot4.png

BIN

User Study/Photo/testCollider.png

BIN

User Study/Photo/testCollider2.png

BIN

User Study/Photo/testVictim1.png

BIN

User Study/Photo/testVictim2.png

BIN

User Study/Photo/testVictim3.png

BIN

User Study/Photo/testVictim4.png

BIN

User Study/Photo/ui1.png

BIN

User Study/Photo/ui2.png

BIN

User Study/Photo/ui3.png

+ 0

- 2

User Study/Procedure.md