47 changed files with 168 additions and 31 deletions

BIN

.DS_Store

BIN

Hector_v2/.DS_Store

BIN

Hector_v2/Assets/.DS_Store

BIN

Hector_v2/Library/.DS_Store

BIN

Hector_v2/obj/.DS_Store

+ 35

- 4

Thesis.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

BIN

User Study/.DS_Store

BIN

User Study/Google Form/.DS_Store

+ 10

- 3

User Study/Google Form/Hector V2 Nutzerstudie.csv

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

BIN

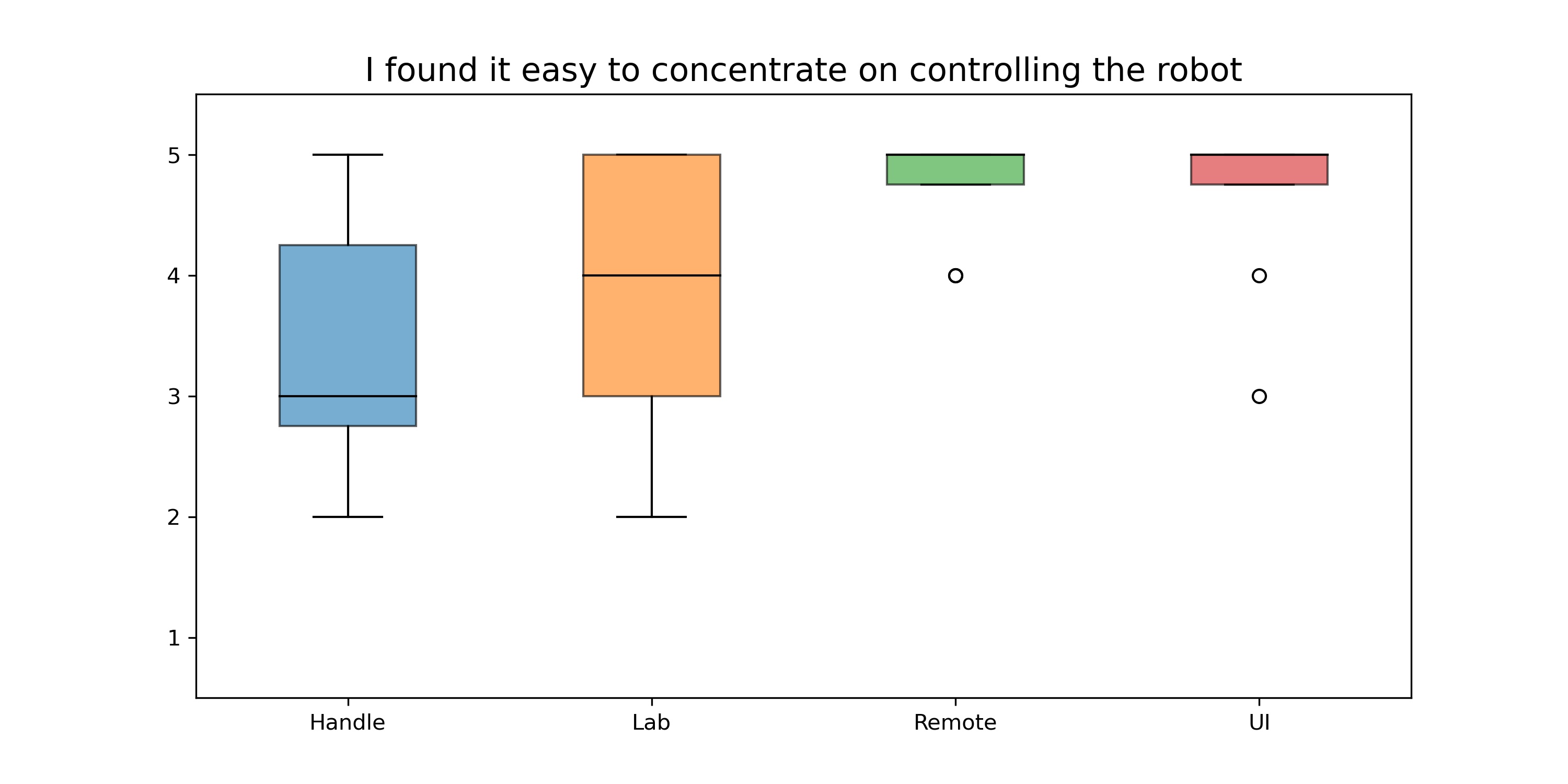

User Study/Google Form/I found it easy to concentrate on controlling the robot.jpg

BIN

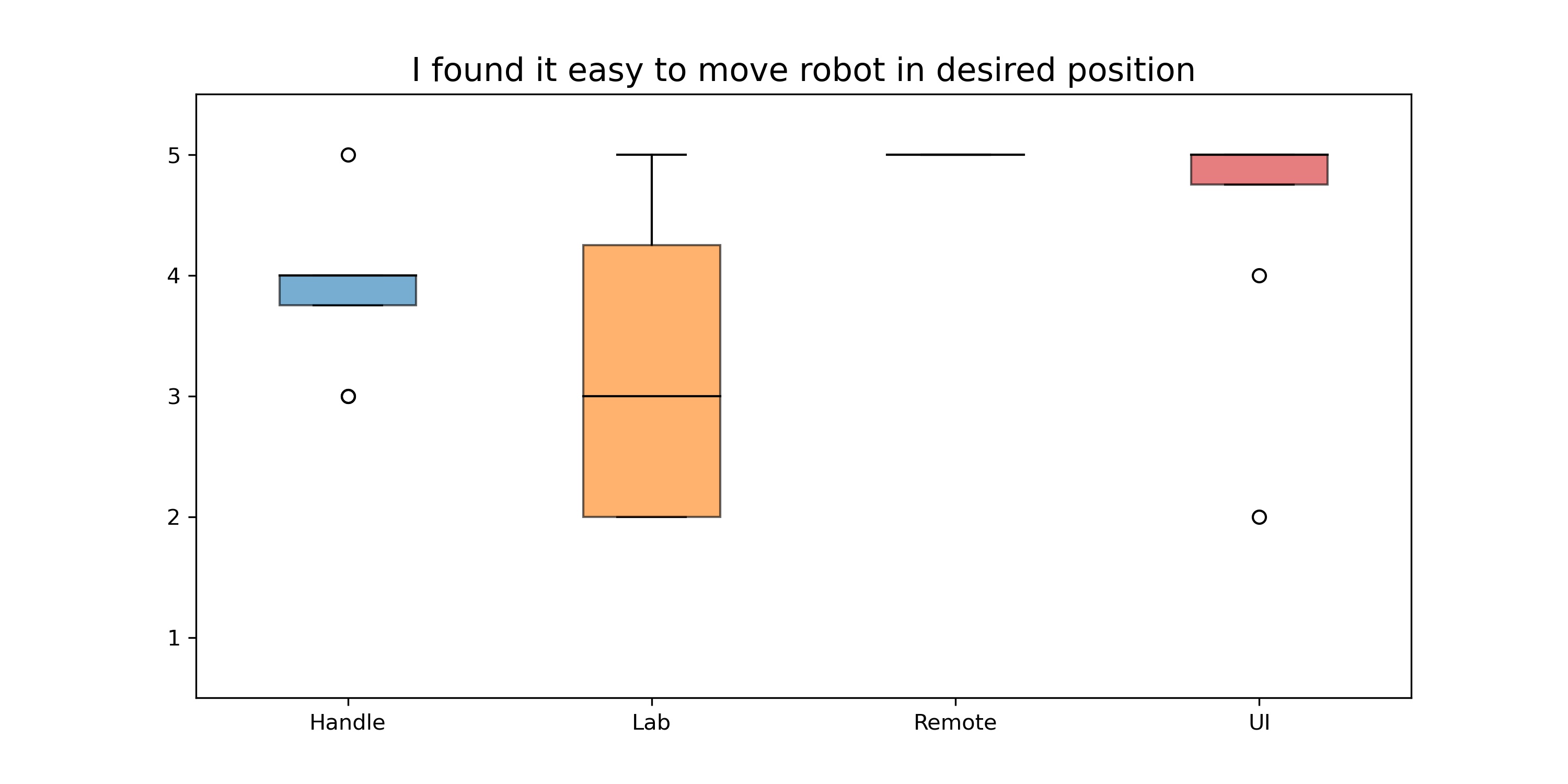

User Study/Google Form/I found it easy to move robot in desired position.jpg

BIN

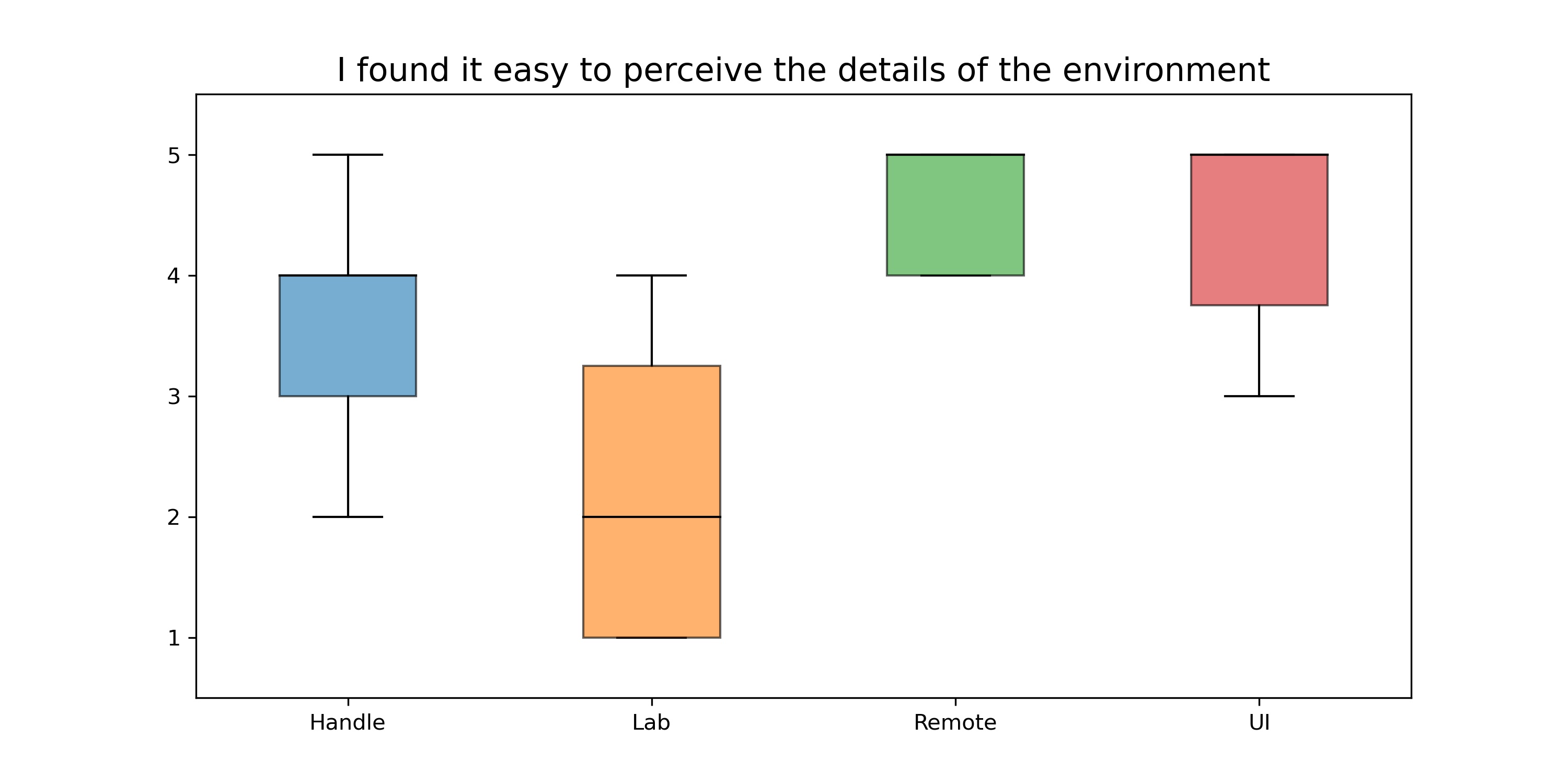

User Study/Google Form/I found it easy to perceive the details of the environment.jpg

+ 1

- 0

User Study/Google Form/statistic.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TLX/HectorVR-10.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TLX/HectorVR-11.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TLX/HectorVR-12.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TLX/HectorVR-5.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 6

- 0

User Study/TLX/HectorVR-6.csv

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TLX/HectorVR-7.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 4

- 0

User Study/TLX/HectorVR-8.csv

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TLX/HectorVR-9.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TLX/Mean.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 33

- 0

User Study/TLX/Merged.csv

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

BIN

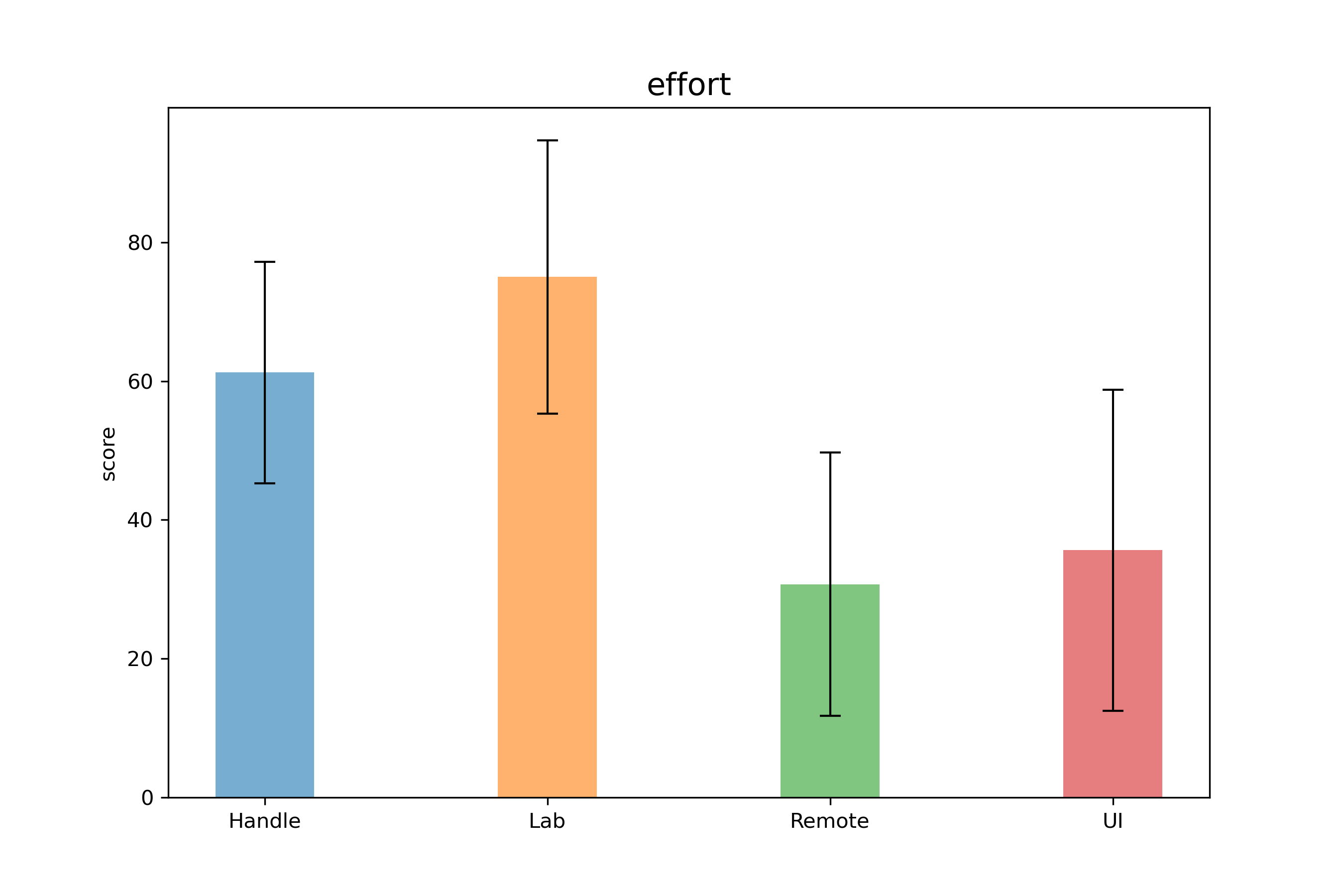

User Study/TLX/effort.png

BIN

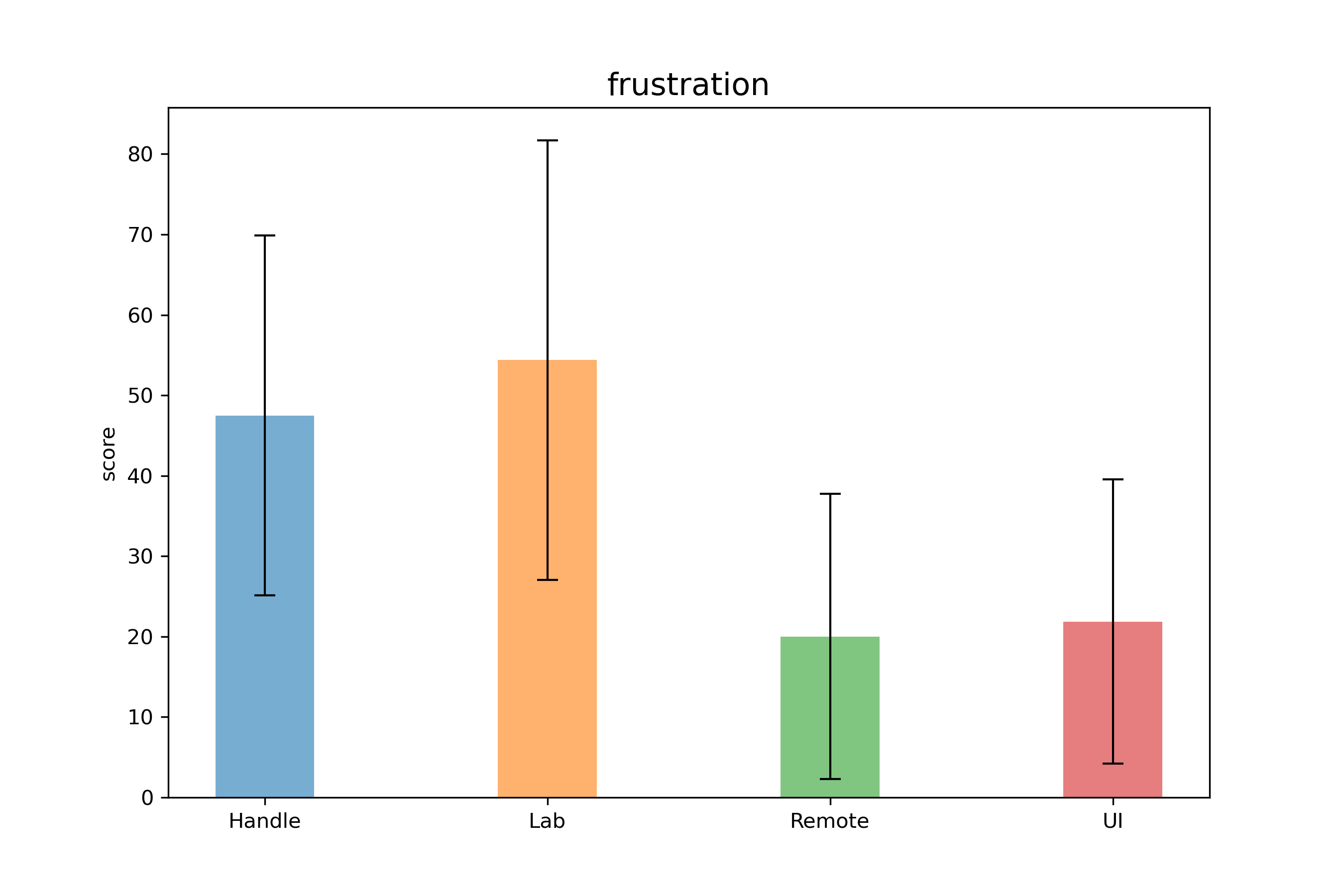

User Study/TLX/frustration.png

BIN

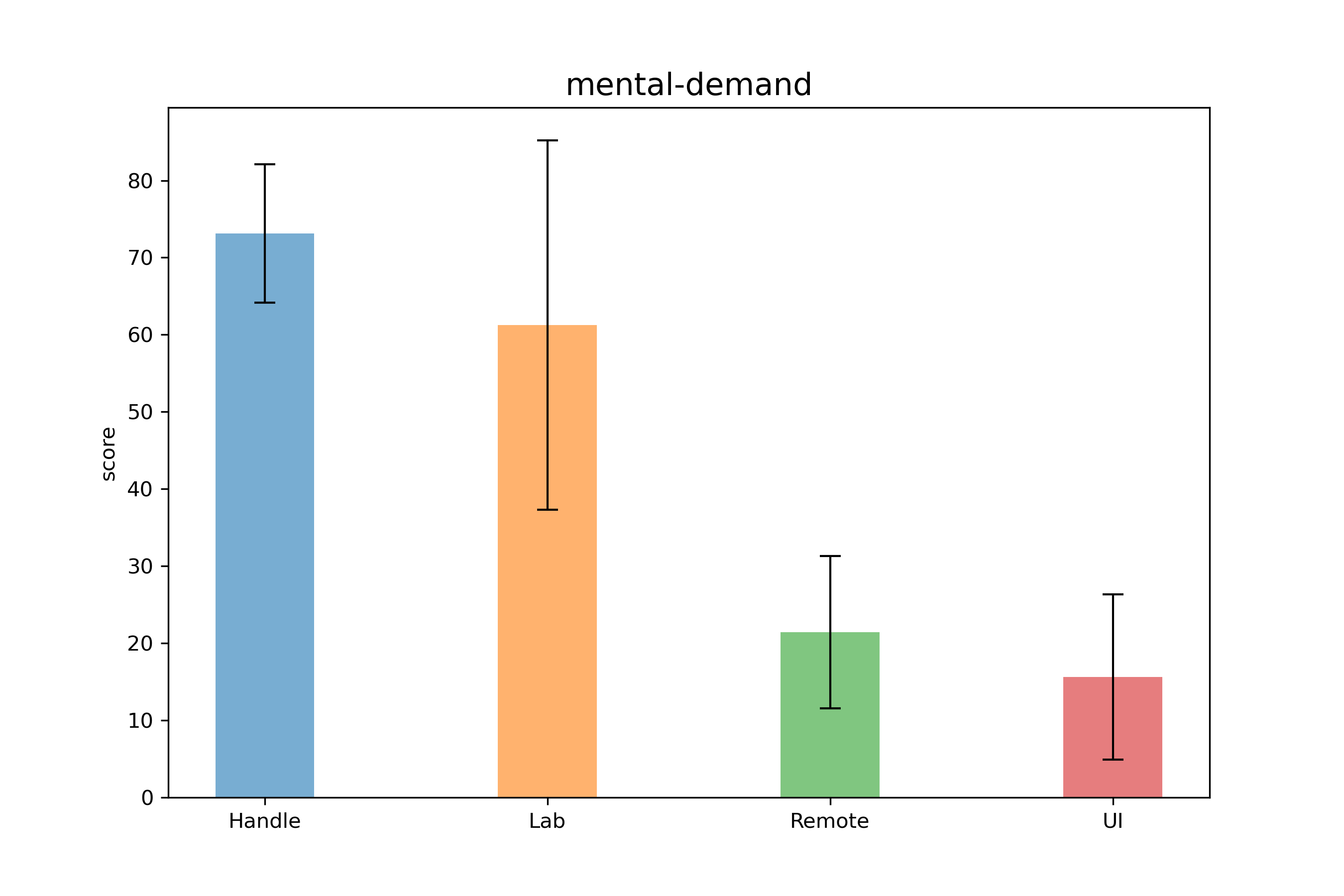

User Study/TLX/mental-demand.png

BIN

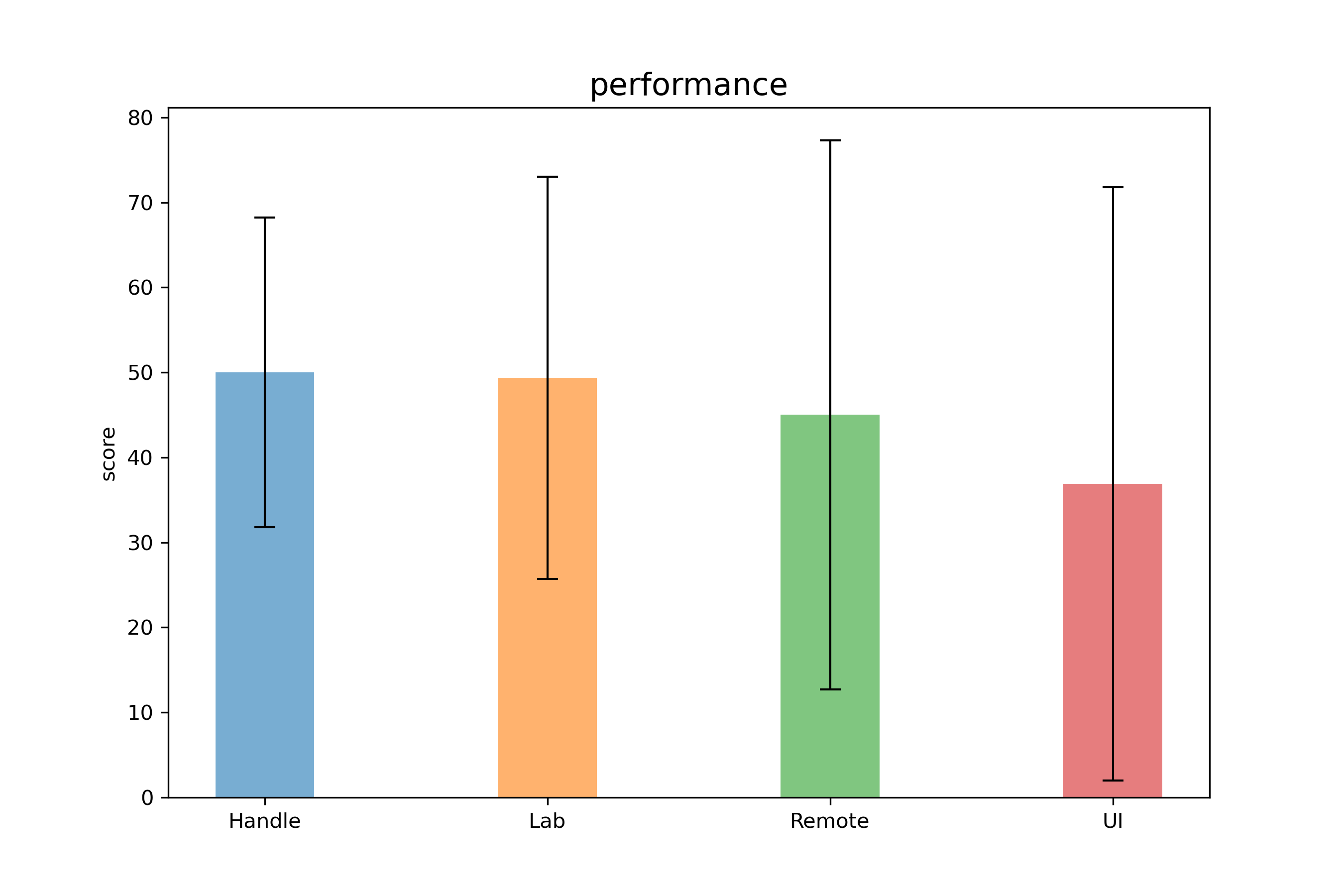

User Study/TLX/performance.png

BIN

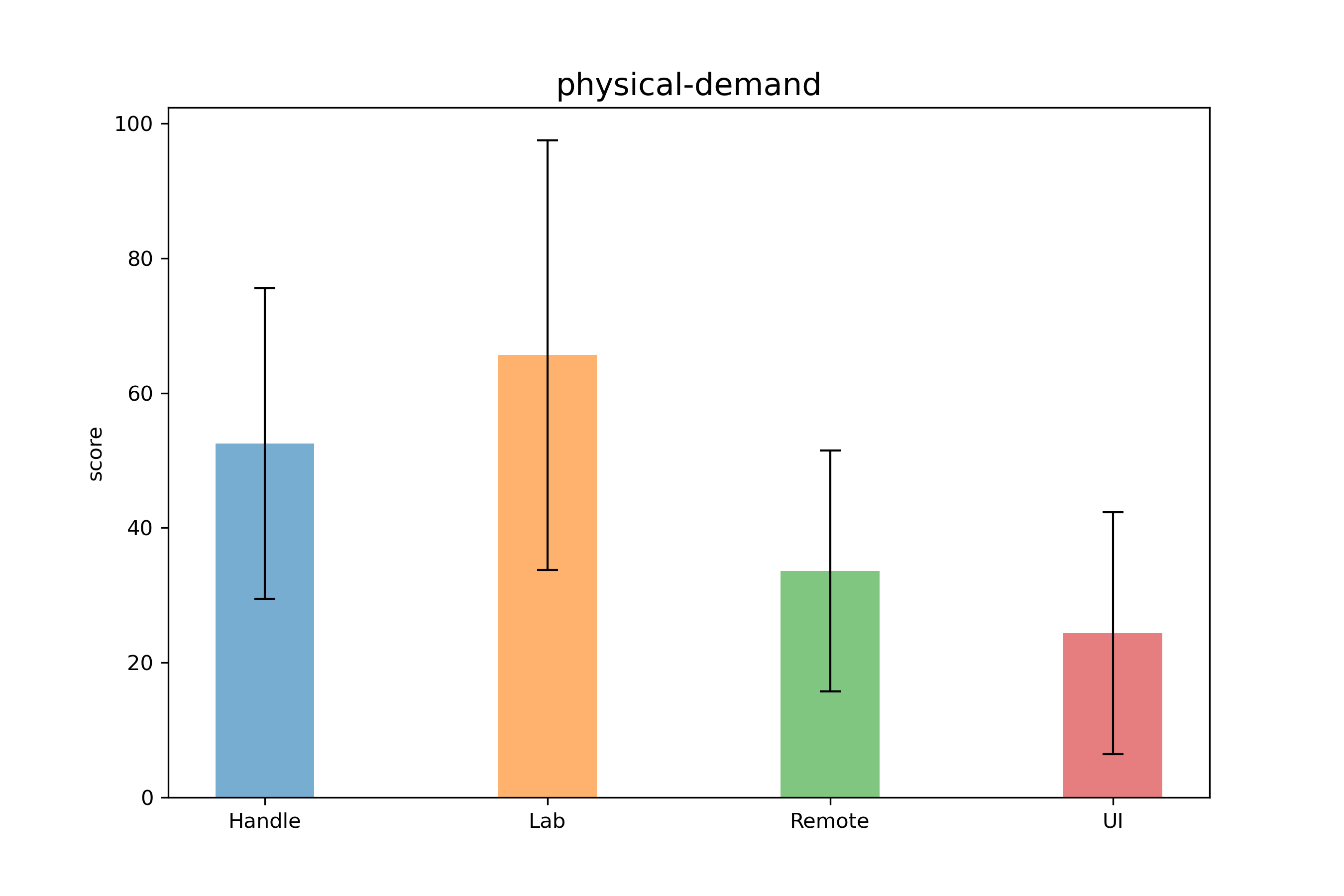

User Study/TLX/physical-demand.png

+ 5

- 0

User Study/TLX/standard_deviation.csv

|

||

|

||

|

||

|

||

|

||

|

||

BIN

User Study/TLX/summary.jpg

BIN

User Study/TLX/temporal-demand.png

BIN

User Study/TLX/total.png

BIN

User Study/TestResult/.DS_Store

+ 0

- 5

User Study/TestResult/1.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TestResult/10.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TestResult/11.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TestResult/12.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 0

- 5

User Study/TestResult/2.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 0

- 5

User Study/TestResult/3.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 0

- 5

User Study/TestResult/4.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TestResult/6.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TestResult/7.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TestResult/8.csv

|

||

|

||

|

||

|

||

|

||

|

||

+ 5

- 0

User Study/TestResult/9.csv

|

||

|

||

|

||

|

||

|

||

|

||

BIN

User Study/TestResult/Rescue situation.png

BIN

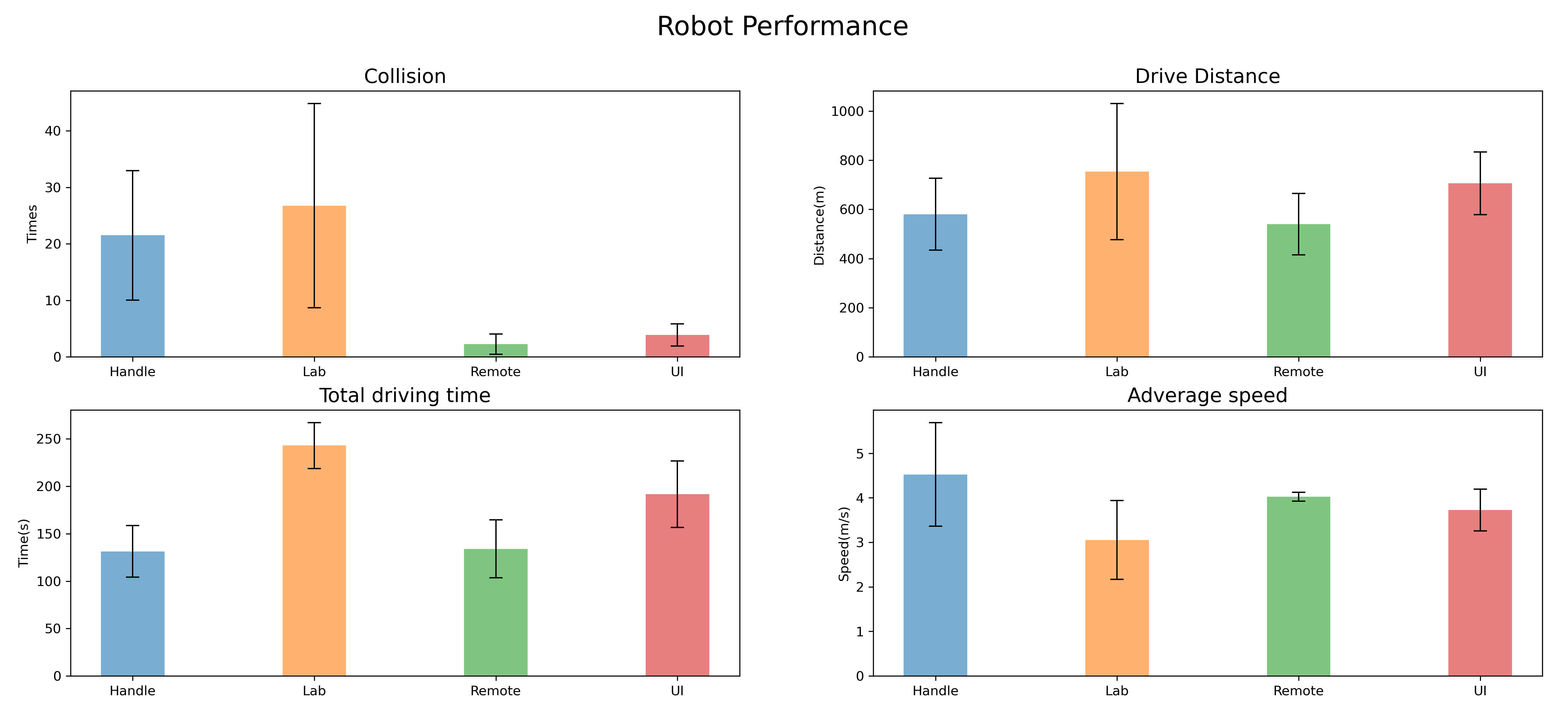

User Study/TestResult/Robot Performance.png

+ 4

- 4

User Study/TestResult/statistic.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||