# Hector VR v2.0

## Introduction

Simulation of a ground-based rescue robot with four operating modes and test scenarios.

## Setup

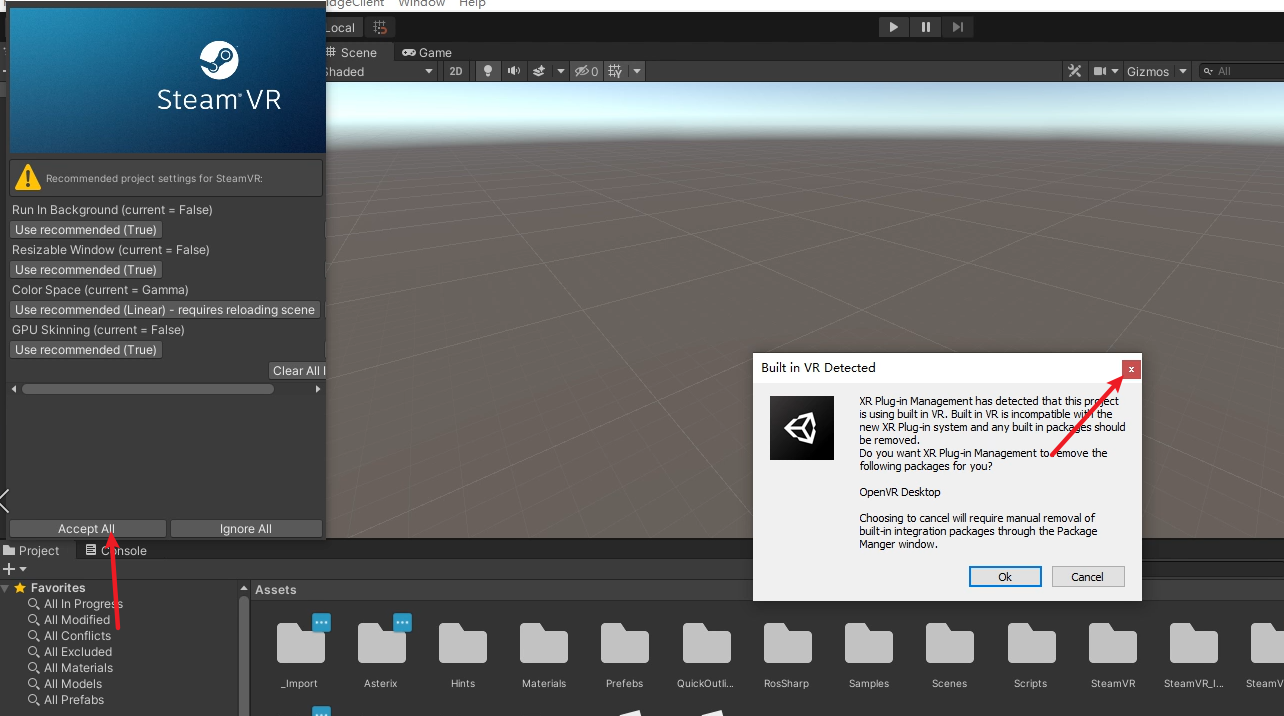

##### 0. Install

##### 1. SteamVR Input

Set up the SteamVR input bindings under `Window>SteamVR Input>Open binding UI`.

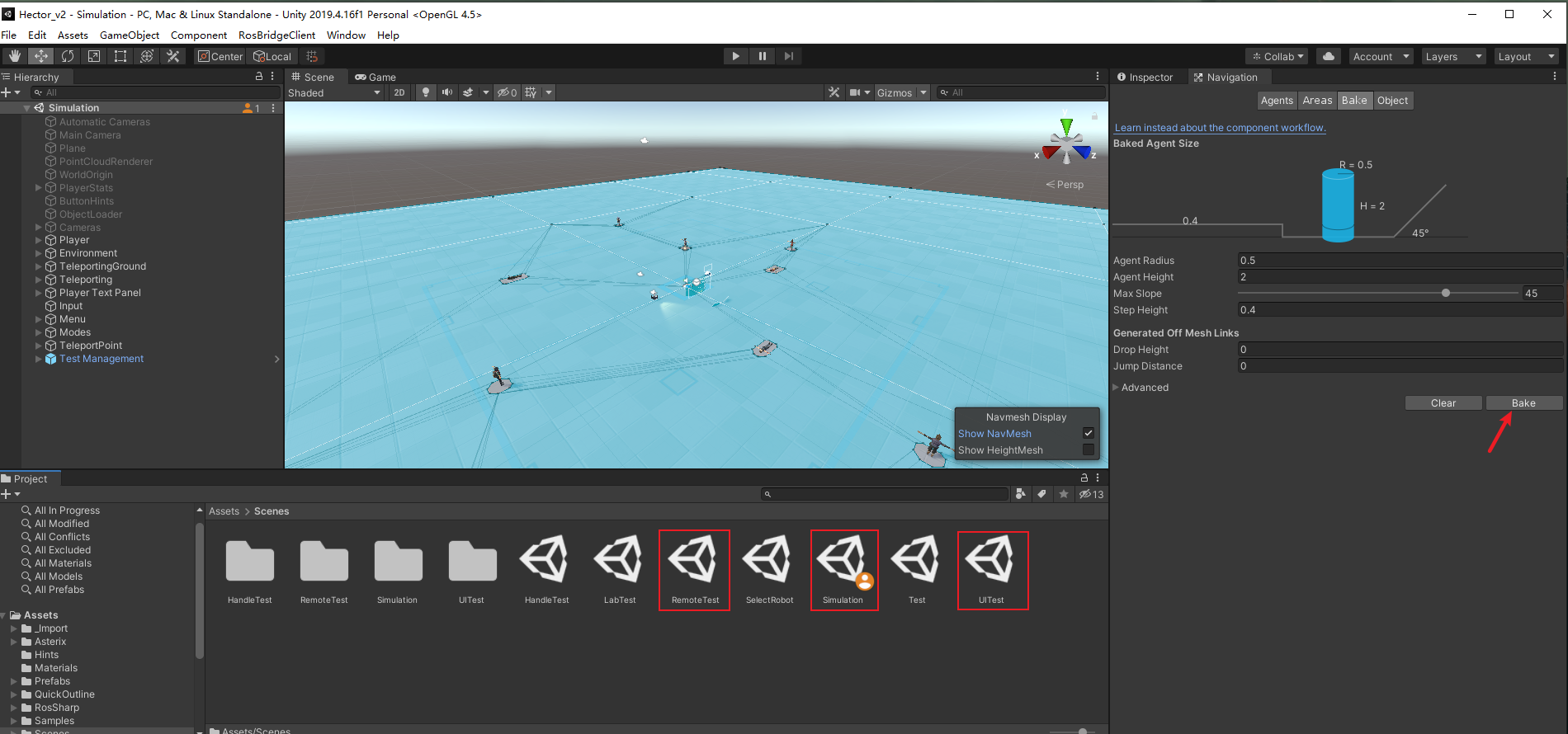

##### 2. NavMesh Agent

The project uses a NavMesh so that the robot can navigate the scene. Set the walkable area and bake it under `Window>AI>Navigation`.

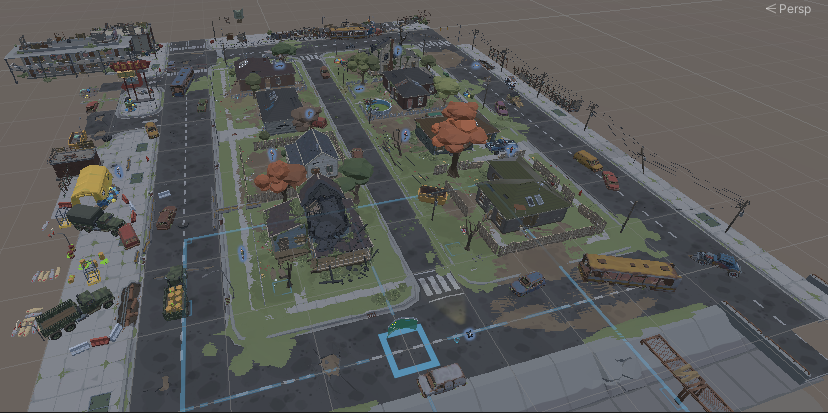

This has to be done in each of the following scenes: Simulation, RemoteTest and UITest.

## Operation Modes

##### 1. Handle

The user controls the robot directly with the handheld VR controller (the handle).

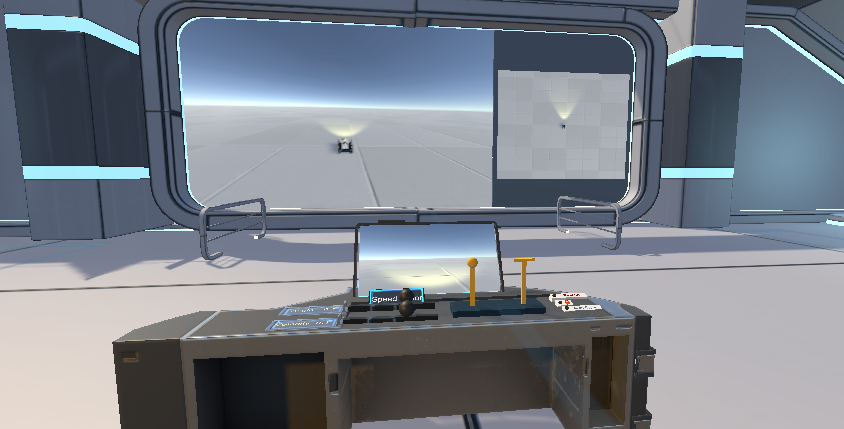

##### 2. Lab

The control environment is modelled as closely as possible on a real laboratory. The robot is operated by grabbing the joystick or pressing buttons with the virtual hand.

The virtual joystick movement and button effects are based on the open-source GitHub project [VRtwix](https://github.com/rav3dev/vrtwix).

##### 3. Remote Mode

In this mode the user can pick up operating tools by hand, such as the remote control. Alternatively, a target point can be set directly with the right hand; the robot then drives to that point automatically.
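
To give a rough picture of how automatic driving to a target point works: Unity's NavMesh finds a path over the baked walkable polygons, and Unity's manual describes that search as A*. The sketch below is not the project's code and does not use Unity. It swaps in a plain 4-connected grid (the hypothetical `astar` function, with `#` marking blocked cells) to show the same A* idea in Python.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column) on a hypothetical occupancy grid


def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to goal; '#' marks a blocked cell."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance: never overestimates for unit-cost 4-way moves.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    g: Dict[Cell, int] = {start: 0}
    came_from: Dict[Cell, Cell] = {}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > g[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != '#':
                new_cost = cost + 1
                if new_cost < g.get(nxt, float('inf')):
                    g[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable
```

In the project itself, setting a target point would more likely amount to handing the point to a `NavMeshAgent` (for example via `SetDestination`) and letting Unity plan and follow the path. That is an assumption about the implementation, not something this README states.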

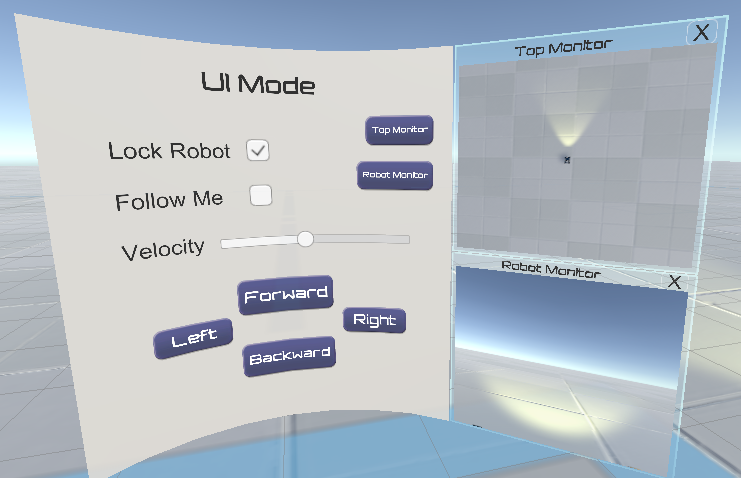

##### 4. UI Mode

In this mode the user operates the robot through the UI, pointing and clicking with the ray cast from the controller. Clicking a direction button sets the robot's direction of movement. The user can also turn on the follow function, in which case the robot continuously follows the user's position in the virtual world.
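
The follow function can be thought of as a per-frame update that moves the robot towards the user and stops a short distance away. The following Python sketch is illustrative only: `Vec2`, `follow_step` and `stop_distance` are hypothetical names, the robot moves in a straight line, and obstacles are ignored. In Unity a similar effect could come from repeatedly passing the user's position to `NavMeshAgent.SetDestination` with a non-zero `stoppingDistance`, but the source does not say how the project does it.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec2:
    """Ground-plane position (x, z); height is ignored for a ground robot."""
    x: float
    z: float


def follow_step(robot: Vec2, user: Vec2, speed: float, dt: float,
                stop_distance: float = 1.0) -> Vec2:
    """Return the robot's position after one frame of following the user.

    The robot heads straight for the user at `speed` units per second,
    holds position once it is within `stop_distance`, and never moves
    closer than that radius.
    """
    dx, dz = user.x - robot.x, user.z - robot.z
    dist = math.hypot(dx, dz)
    if dist <= stop_distance:
        return robot  # close enough: hold position
    # Move at most speed * dt, but do not cross into the stop radius.
    step = min(speed * dt, dist - stop_distance)
    return Vec2(robot.x + dx / dist * step, robot.z + dz / dist * step)
```

Calling `follow_step` once per frame with the latest user position makes the robot trail the user and settle at `stop_distance` whenever the user stands still.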

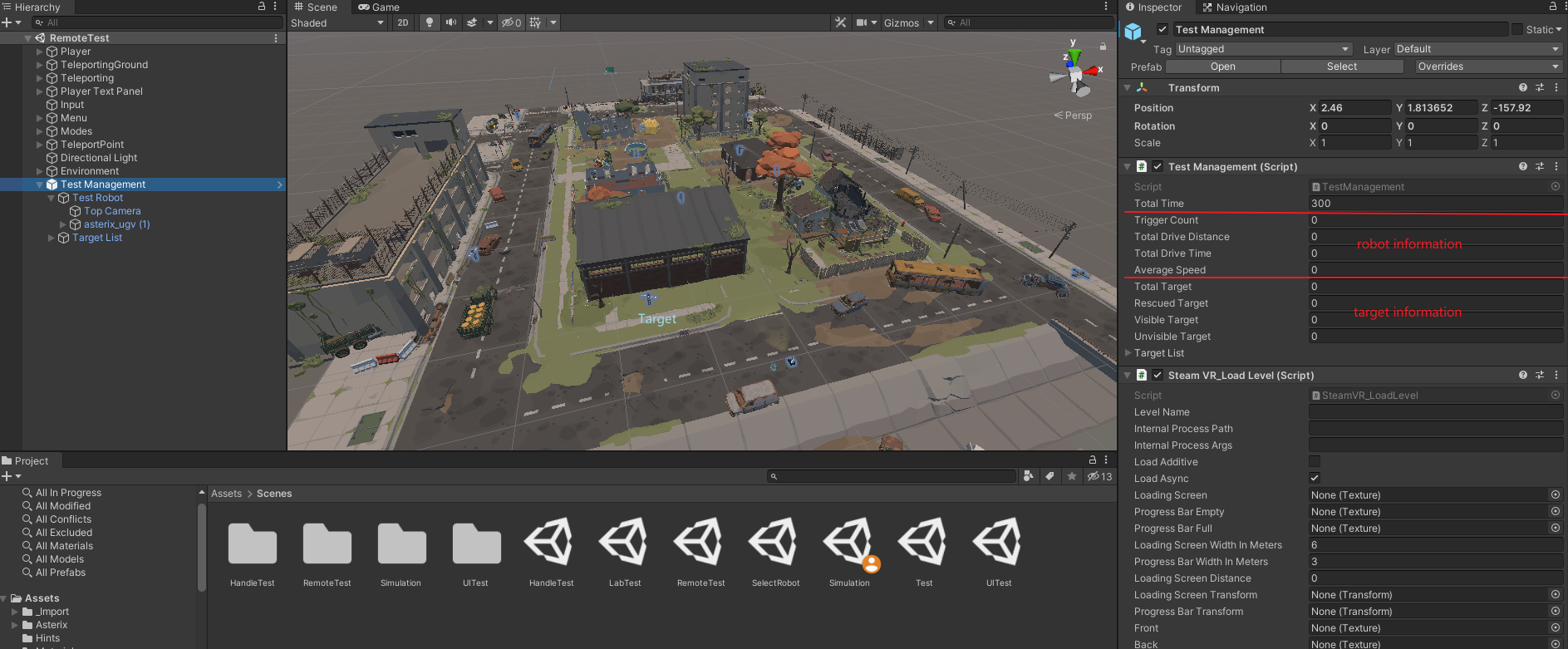

## Test Scene

In the test scenario, the user has to find as many victims as possible within a limited time. The test ends when the time runs out or when all victims have been found.

Throughout the test, the robot's performance is recorded, including the total distance travelled, the average speed and the number of collisions. How the user handles the victims is recorded as well, for example when a victim was already in view but was ignored by the user.
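
A minimal sketch of how these statistics could be accumulated frame by frame, assuming the robot's ground position and the set of colliders it is touching are sampled every frame. `TestRecorder` and all of its fields are hypothetical. Average speed is taken here as total distance divided by elapsed test time, and a collision is counted only when contact with an object begins, so one long scrape along a wall counts once. Both choices are assumptions, not the project's definitions.

```python
import math
from dataclasses import dataclass, field
from typing import Iterable, Optional, Set, Tuple


@dataclass
class TestRecorder:
    """Hypothetical per-run recorder for the test scene."""
    time_limit: float                 # seconds available for the test
    total_victims: int
    elapsed: float = 0.0
    distance: float = 0.0
    collisions: int = 0
    found: Set[str] = field(default_factory=set)
    last_pos: Optional[Tuple[float, float]] = None
    touching: Set[str] = field(default_factory=set)

    def tick(self, pos: Tuple[float, float], dt: float,
             contacts: Iterable[str]) -> None:
        """Record one frame: robot (x, z) position, frame time, touched collider ids."""
        self.elapsed += dt
        if self.last_pos is not None:
            self.distance += math.dist(self.last_pos, pos)
        self.last_pos = pos
        current = set(contacts)
        # Count only contacts that were not already active last frame.
        self.collisions += len(current - self.touching)
        self.touching = current

    def mark_found(self, victim_id: str) -> None:
        self.found.add(victim_id)

    @property
    def average_speed(self) -> float:
        return self.distance / self.elapsed if self.elapsed > 0 else 0.0

    @property
    def finished(self) -> bool:
        # End condition from the test description: time is up or all victims found.
        return self.elapsed >= self.time_limit or len(self.found) >= self.total_victims
```

For example, moving from (0, 0) to (3, 4) to (6, 8) over four one-second frames gives a distance of 10 and an average speed of 2.5.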

###### Collision Detection

Every object in the scene that takes part in collision detection has a suitable collider attached.

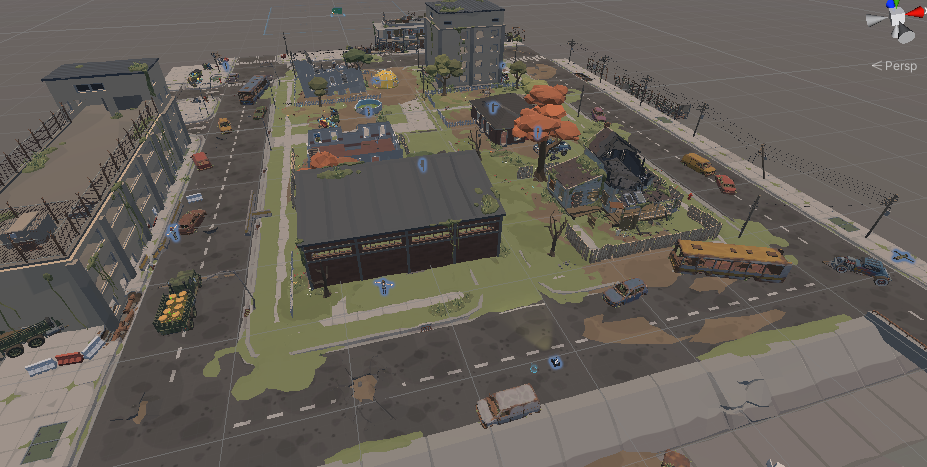

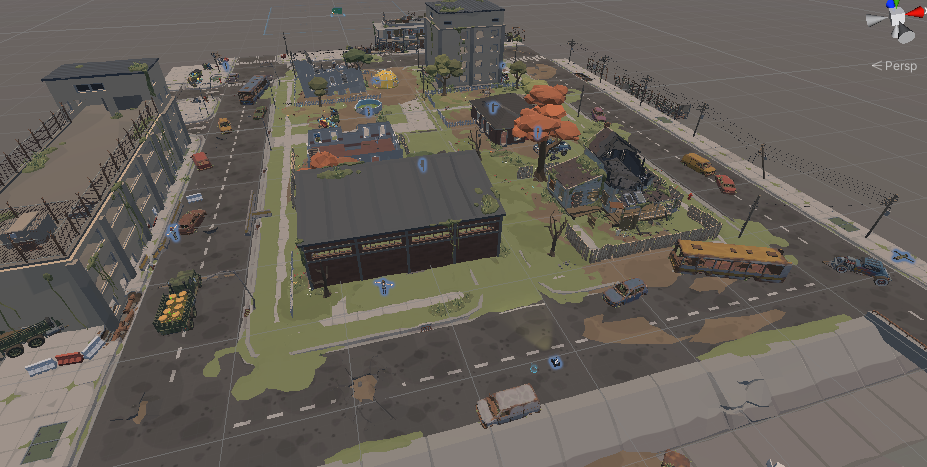

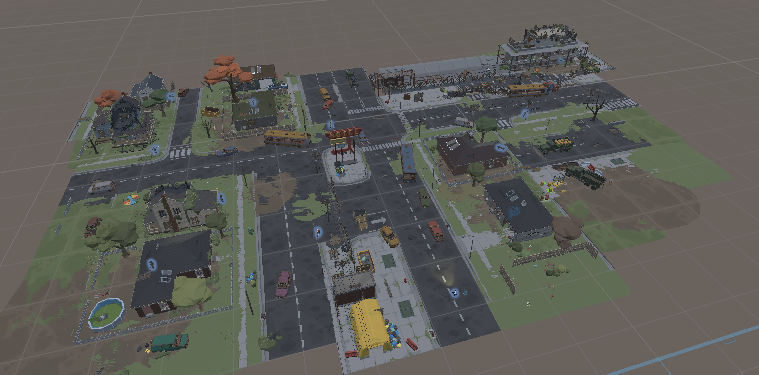

###### Scene Overview

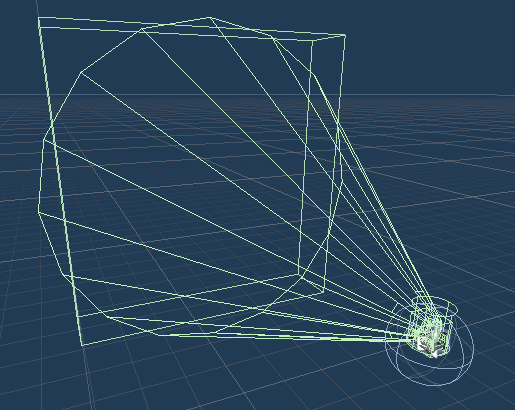

## Robot

To simulate a robot that uses a probe camera to detect the real environment and synchronise it into Unity, a cone-shaped collider is attached to the robot.
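
The cone-shaped collider effectively answers one geometric question: does a point, such as a victim, lie inside the camera's cone of view? The sketch below states that test directly in Python. It assumes the cone is described by its tip at the camera, a viewing direction, a half opening angle and a length along the axis. `in_view_cone` is a hypothetical helper. Unlike a real camera it ignores occlusion by walls, which in Unity would typically need an additional raycast.

```python
import math
from typing import Sequence


def in_view_cone(apex: Sequence[float], forward: Sequence[float],
                 point: Sequence[float], half_angle_deg: float,
                 length: float) -> bool:
    """Return True if `point` lies inside a cone whose tip is at `apex`.

    forward: viewing direction (any non-zero length);
    half_angle_deg: half of the cone's opening angle in degrees (below 90);
    length: distance from the tip to the cone's base, measured along `forward`.
    """
    to_point = [p - a for p, a in zip(point, apex)]
    dist = math.hypot(*to_point)
    if dist == 0.0:
        return True  # the point coincides with the camera position
    f_len = math.hypot(*forward)
    dot = sum(t * f for t, f in zip(to_point, forward))
    axial = dot / f_len  # signed distance of the point along the viewing axis
    if axial > length:
        return False  # beyond the base of the cone
    # Inside if the angle between the axis and the point is within the half angle.
    return dot / (dist * f_len) >= math.cos(math.radians(half_angle_deg))
```

For example, `in_view_cone((0, 0, 0), (0, 0, 1), (1, 0, 5), 30, 10)` is true because the point is only about 11° off the axis. Running a check like this against each victim is one way a test could notice victims that were in view but ignored.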
