81 commits 82a98880a2 ... a3ab6c0c1a

| Date | Commits |
|---|---|
| 3 years ago | 3 |
| 4 years ago | 63 |
| 5 years ago | 15 |

| File | Added | Removed |
|---|---|---|
| .gitignore | +0 | -12 |
| .ipynb_checkpoints/README-checkpoint.md | +0 | -116 |
| LICENSE | +0 | -21 |
| README.md | +0 | -108 |
| data/README.md | +0 | -4 |
| data/cifar/.gitkeep | +0 | -0 |
| data/fashion_mnist/.gitkeep | +0 | -0 |
| data/mnist/.gitkeep | +0 | -0 |
| requirements.txt | +0 | -4 |
| save/.gitkeep | +0 | -0 |

BIN

save/MNIST (MLP, IID) FP16 and FP32 Comparison_acc_FP16_32.png

BIN

save/MNIST (MLP, IID) FP16 and FP32 Comparison_loss_FP16_32.png

BIN

save/MNIST_CNN_IID FP16 and FP32 Comparison_acc_FP16_32.png

BIN

save/MNIST_CNN_IID FP16 and FP32 Comparison_loss_FP16_32.png

BIN

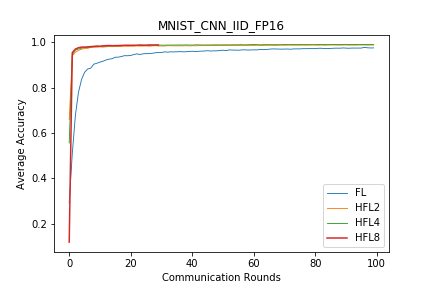

save/MNIST_CNN_IID_FP16_acc_FP16.png

BIN

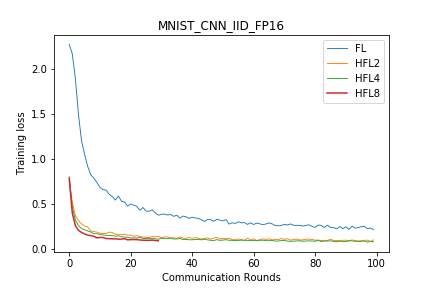

save/MNIST_CNN_IID_FP16_loss_FP16.png

BIN

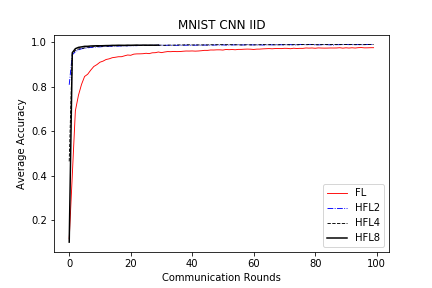

save/MNIST_CNN_IID_acc.png

BIN

save/MNIST_CNN_IID_acc_FP16.png

BIN

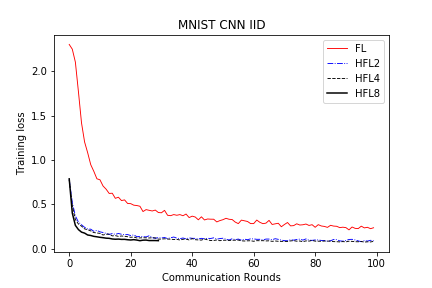

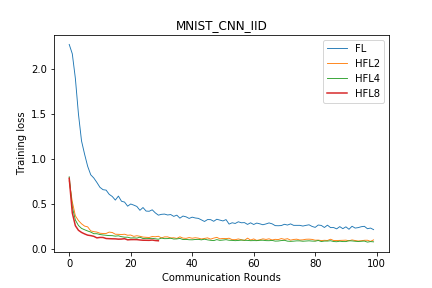

save/MNIST_CNN_IID_loss.png

BIN

save/MNIST_CNN_IID_loss_FP16.png

BIN

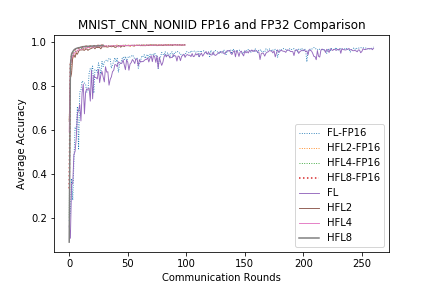

save/MNIST_CNN_NONIID FP16 and FP32 Comparison_acc_FP16_32.png

BIN

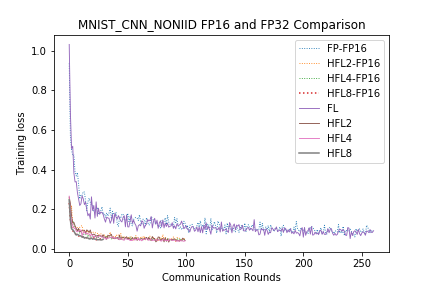

save/MNIST_CNN_NONIID FP16 and FP32 Comparison_loss_FP16_32.png

BIN

save/MNIST_CNN_NONIID_FP16_acc_FP16.png

BIN

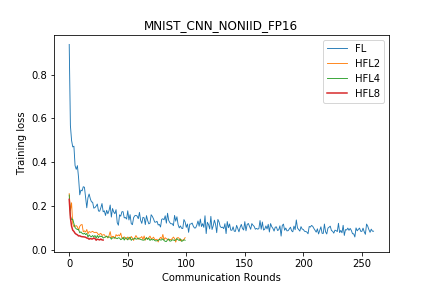

save/MNIST_CNN_NONIID_FP16_loss_FP16.png

BIN

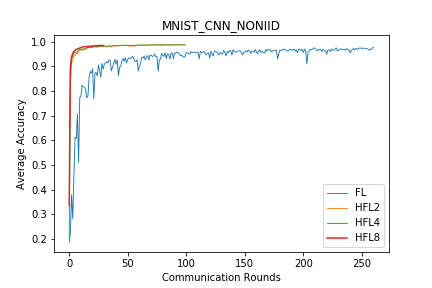

save/MNIST_CNN_NONIID_acc.png

BIN

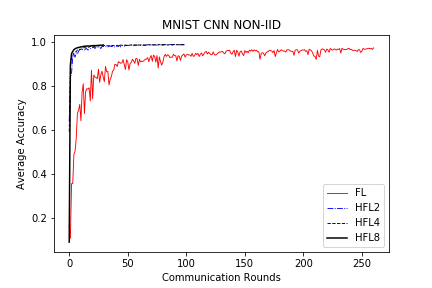

save/MNIST_CNN_NONIID_acc_FP16.png

BIN

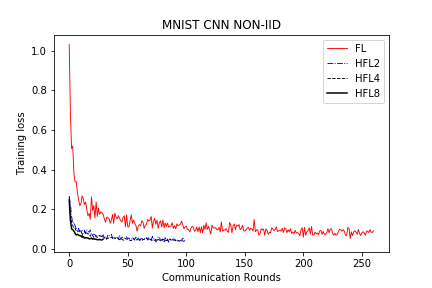

save/MNIST_CNN_NONIID_loss.png

BIN

save/MNIST_CNN_NONIID_loss_FP16.png

BIN

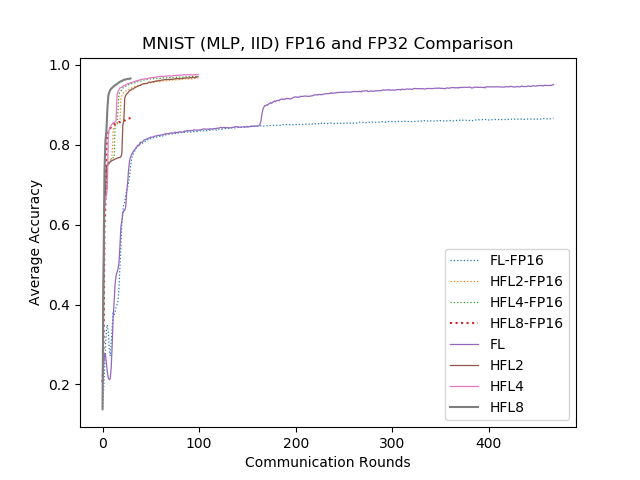

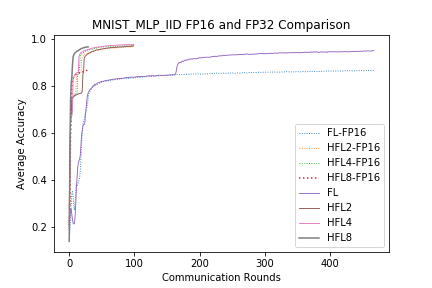

save/MNIST_MLP_IID FP16 and FP32 Comparison_acc_FP16_32.png

BIN

save/MNIST_MLP_IID FP16 and FP32 Comparison_loss_FP16_32.png

BIN

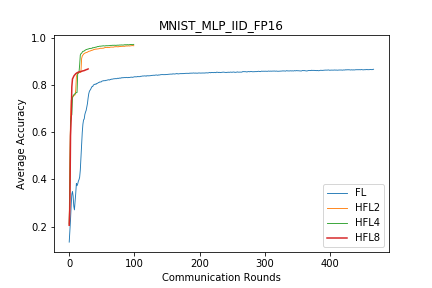

save/MNIST_MLP_IID_FP16_acc_FP16.png

BIN

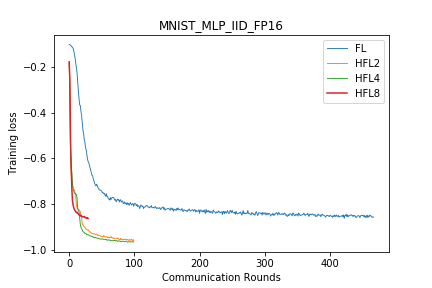

save/MNIST_MLP_IID_FP16_loss_FP16.png

BIN

save/MNIST_MLP_IID_acc.png

BIN

save/MNIST_MLP_IID_acc_FP16.png

BIN

save/MNIST_MLP_IID_loss.png

BIN

save/MNIST_MLP_IID_loss_FP16.png

BIN

save/MNIST_MLP_NONIID FP16 and FP32 Comparison_acc_FP16_32.png

BIN

save/MNIST_MLP_NONIID FP16 and FP32 Comparison_loss_FP16_32.png

BIN

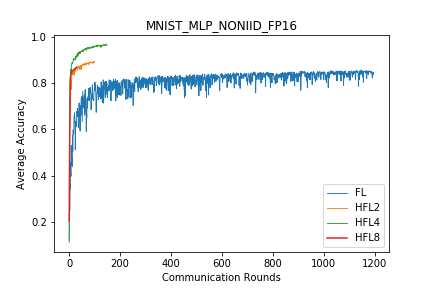

save/MNIST_MLP_NONIID_FP16_acc_FP16.png

BIN

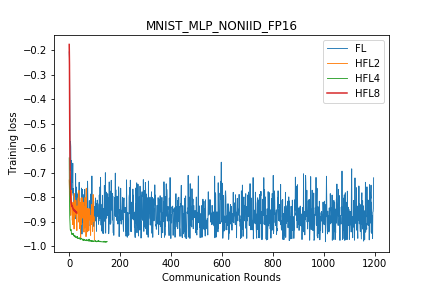

save/MNIST_MLP_NONIID_FP16_loss_FP16.png

BIN

save/MNIST_MLP_NONIID_acc.png

BIN

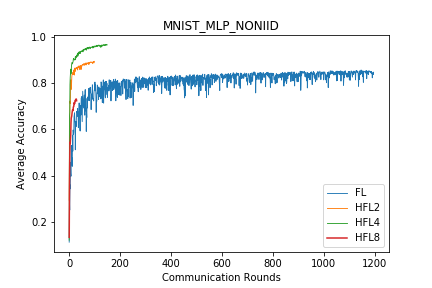

save/MNIST_MLP_NONIID_acc_FP16.png

BIN

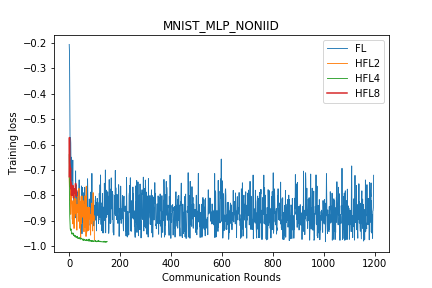

save/MNIST_MLP_NONIID_loss.png

BIN

save/MNIST_MLP_NONIID_loss_FP16.png